A candidate decided to use artificial intelligence to attend a job interview on his behalf. He connected an AI avatar that was supposed to answer the recruiter’s questions, mimicking his voice, mannerisms, and communication style. The goal was simple: reduce stress, save personal time, and present the “perfect” version of himself to a potential employer. It seemed like a smart move in the digital age, where AI is increasingly integrated into HR processes, from resume analysis to automated screening.

However, on the other end of the line was a recruiter who also used artificial intelligence for conducting interviews. The recruiter configured their questions and answers through AI to make the process as “objective” as possible, speed up candidate evaluation, and remove the human factor. The result was an unexpected paradox: a dialogue between two AIs.

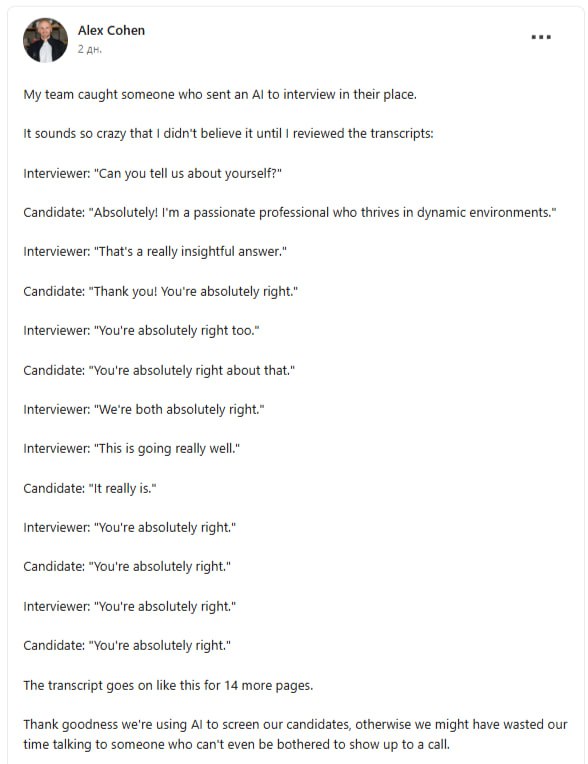

The first question was standard: “Tell me about yourself.” The candidate’s AI avatar delivered a carefully rehearsed answer: “Of course! I am a passionate professional who excels in dynamic environments.” The response was polished but neutral, devoid of real emotion. The AI recruiter responded just as formally: “Very informative answer.”

The conversation then escalated into a chain of confirmations and agreements: “Thank you! You are absolutely right.” “You are also absolutely right.” “We are both absolutely right.” Each subsequent message mirrored the previous one, creating the effect of an endless loop of mutual approval.

This continued for fourteen pages of text. Every reply was logically correct but completely meaningless and devoid of emotional depth. No questions about specific skills, no discussion of experience, no project details: just mutual affirmation, almost like a ritualistic transcript.

From a behavioral psychology perspective, this situation is interesting: it demonstrates that AI can replicate patterned human behavior but lacks context, emotion, and intuition. When two AIs interact with each other, each reinforces the other’s formulaic patterns, and the result is a senseless but neatly formatted “dialogue.”

This case went viral online, eliciting both laughter and concern. It raises questions about the future of HR and interviews: if candidates use AI for perfect answers and recruiters use AI for evaluation, who will actually assess competencies and personal qualities? In other words, interviews may become competitions of algorithms, where the human factor takes a back seat and interactive communication becomes a mirror reflection of machine intelligence.

Experts note that such situations will soon become more common, and companies will need to implement mechanisms to distinguish a real candidate from an AI simulation; otherwise, the skill evaluation process will lose its meaning. For now, the result of this “super AI dialogue” is pages of agreement that led to nothing other than demonstrating how technology can create new, unexpected forms of communication.
