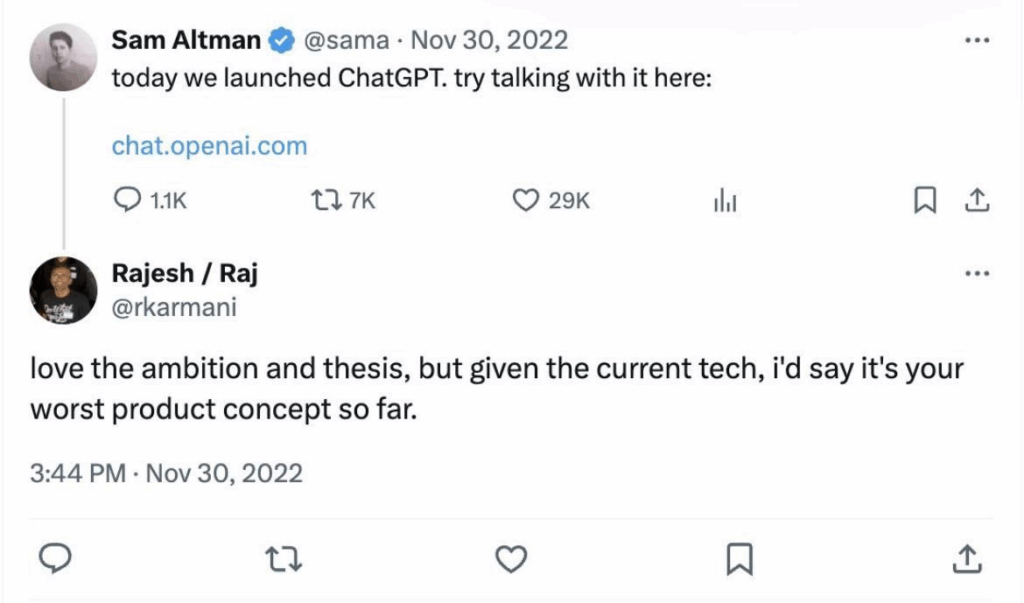

Three years ago something happened that today seems almost mythical: OpenAI released a chatbot that changed not only the relationship between people and machines but also the very idea of what software is capable of. On November 30, 2022, Sam Altman simply posted a link on Twitter.

No pomp, no fanfare. The screenshot shows a user comment calling the launch “the worst product concept OpenAI has ever made”. Spoiler: three years later this “concept” writes code, creates videos, holds live voice conversations, replaces dozens of tools, has become part of the workflows of millions of people, and has launched the biggest tech boom of the decade.

ChatGPT reached one million users in five days, and two months later it became the fastest-growing consumer application in history, with 100 million users. Today more than 800 million people use it weekly, roughly the population of an entire continent.

But here’s what many executives and companies miss: the first 1000 days were just a warm-up. It was a phase of experiments, mistakes, excitement, and panic, as people tried to figure out what to do with an intelligence that doesn’t sleep, doesn’t get tired, and writes better than half of university graduates. The real AI revolution begins now. The next 1000 days will determine who extracts value from the technology, and who becomes that value.

AI capabilities: what the tests actually show

Modern AI models score 120 points or more on standardized IQ tests, the threshold corresponding to the “gifted” level. Claude Opus 4.5 and GPT-5.1 score 120, Gemini 3 Pro Preview 123, and Grok 4 Expert Mode 126.

For comparison: IQ scales are normed so that the human average is 100, with the “average” band usually given as 90-110. Einstein’s IQ was never measured, but it is popularly estimated at around 160.

But these numbers are like a digital thermometer in freezing weather: the reading looks precise but misses what actually matters. A model scoring 126 points may brilliantly solve logic puzzles, yet confidently invent court cases, miss the simplest ethical traps, and suddenly “break” on tasks slightly outside its training data.

When ChatGPT launched, GPT-3.5 scored about 85 points, below the human average. Within 15 months, Claude 3 had crossed the threshold of average human intelligence. The trajectory is clear, but it does not guarantee wisdom, nor does it free people from the need to think for themselves.
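To put the scores quoted in this section in perspective, the Python sketch below converts an IQ score into the share of people expected to score lower. It assumes the usual normalization of a mean of 100 and a standard deviation of 15. The article does not say which test or norms were used for the models. The percentiles are therefore illustrative only, and they say nothing about whether a model's score means the same thing as a person's.

```python
import math

# Assumption: IQ scores follow a normal distribution with mean 100 and
# standard deviation 15 (the convention of most modern IQ scales).
MEAN = 100.0
SD = 15.0

def iq_percentile(score: float) -> float:
    """Return the percentage of the population (0-100) expected to score below `score`."""
    z = (score - MEAN) / SD
    # Standard normal CDF expressed via the error function.
    return 50.0 * (1.0 + math.erf(z / math.sqrt(2.0)))

# Scores quoted in this section.
scores = {
    "GPT-3.5 (at launch)": 85,
    "Claude Opus 4.5 / GPT-5.1": 120,
    "Gemini 3 Pro Preview": 123,
    "Grok 4 Expert Mode": 126,
}

for name, score in scores.items():
    print(f"{name}: IQ {score} -> higher than ~{iq_percentile(score):.1f}% of people")
```

Under these assumptions, a score of 120 sits at roughly the 91st percentile and 126 at roughly the 96th. GPT-3.5's 85 falls near the 16th percentile.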

The economy of abundance and its paradox

Amin Vahdat, vice president at Google Cloud, recently stated that the company must double its AI serving capacity every six months to keep up with global demand.

Research by Epoch AI points the same way: the compute used to train frontier models is growing about fourfold each year. That is far faster than transistor counts ever grew under Moore’s Law.
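The two growth figures above are easier to compare once they are expressed in the same unit. The sketch below uses only the Python standard library and converts between "doubles every N months" and "grows X-fold per year". The 24-month doubling used for Moore's Law is an assumption based on the commonly cited form of the law. It is not a figure from this article.

```python
import math

def annual_multiplier_from_doubling(months_to_double: float) -> float:
    """Growth factor per 12 months for a quantity that doubles every `months_to_double` months."""
    return 2.0 ** (12.0 / months_to_double)

def doubling_time_months(annual_multiplier: float) -> float:
    """Months needed to double, given a constant growth factor per year."""
    return 12.0 * math.log(2.0) / math.log(annual_multiplier)

# From the text: capacity doubling every 6 months; training compute growing ~4x per year.
# Assumption: Moore's Law modeled as a doubling every 24 months.
capacity_per_year = annual_multiplier_from_doubling(6)
compute_doubling = doubling_time_months(4.0)
moores_law_per_year = annual_multiplier_from_doubling(24)

print(f"Doubling every 6 months = {capacity_per_year:.1f}x per year")
print(f"4x per year             = doubling every {compute_doubling:.1f} months")
print(f"Moore's Law (24 months) = {moores_law_per_year:.2f}x per year")
print(f"Over five years: {4.0 ** 5:.0f}x at 4x/year vs {moores_law_per_year ** 5:.1f}x at Moore's pace")
```

Doubling every six months works out to exactly 4x per year, the same pace Epoch AI reports. A two-year doubling gives only about 1.41x per year.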

On top of that comes a collapse in cost. From November 2022 to October 2024, the price of running a model at GPT-3.5 level fell from $20 to $0.07 per million tokens, a drop of about 280-fold. On the scale of technology history this is practically madness: the light bulb needed 80 years to become affordable, while AI did it in under two years.
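The cost figures can be checked with a few lines of Python. This sketch assumes the period spans 23 months (November 2022 to October 2024). It converts the total drop into an equivalent constant yearly factor. That annualized rate is an illustration, not a number reported by the source.

```python
# Price of GPT-3.5-level inference in US dollars per million tokens, as quoted in the text.
price_nov_2022 = 20.00
price_oct_2024 = 0.07
months_elapsed = 23  # assumption: November 2022 to October 2024 counted as 23 months

fold_reduction = price_nov_2022 / price_oct_2024
# Constant yearly factor that compounds to the same total reduction over the period.
annual_factor = fold_reduction ** (12 / months_elapsed)

print(f"Total reduction: ~{fold_reduction:.0f}x")
print(f"Equivalent to a ~{annual_factor:.0f}x price drop per year")
```

Since 20 / 0.07 is about 286, "280 times" is a slight round-down. Spread evenly over 23 months, the drop corresponds to prices falling roughly 19-fold per year.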

The Nvidia Blackwell GPU consumes 105,000 times less energy per token than its 2014 predecessor.

But the paradox lies elsewhere: while the cost of running models falls, the cost of training them is skyrocketing.

GPT-3 cost an estimated $4.6 million to train, and GPT-4 more than $100 million. Anthropic is already training models that cost around $1 billion, and it expects training runs of $10 billion by 2026 and $100 billion by 2027.

Epoch AI projects that by 2030 the largest AI supercomputer will cost $200 billion and draw 9 gigawatts of power, roughly the output of nine nuclear reactors.
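Gigawatts measure power, not energy, so a quick conversion helps gauge the scale. The sketch below makes two simplifying assumptions, neither of which is an Epoch AI figure. First, the facility runs at its full 9 GW around the clock. Second, a large nuclear reactor delivers about 1 GW.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years

power_gw = 9.0    # projected draw of the largest AI supercomputer in 2030 (from the text)
reactor_gw = 1.0  # assumption: a typical large nuclear reactor produces ~1 GW

# Assumption: the facility draws its full rated power all year long.
energy_twh_per_year = power_gw * HOURS_PER_YEAR / 1000  # GWh -> TWh
reactors_needed = power_gw / reactor_gw

print(f"~{energy_twh_per_year:.1f} TWh per year, about {reactors_needed:.0f} reactors' output")
```

At full load, that comes to roughly 79 TWh per year.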

The Jevons paradox, AI version

In 1865 the economist William Jevons noticed that as steam engines became more efficient, coal consumption went up rather than down. More usefulness means more use.

AI repeats this scenario. When the cost of using models falls, companies begin to apply AI not only to automate existing tasks but also to create new ones:

- customer support automates not only FAQs but also complex disputed cases

- code generation moves from individual functions to entire systems

- content creation turns into a stream of personalized materials for each customer segment
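One way to see why falling prices can increase total consumption is a textbook constant-elasticity demand model, sketched below in Python. This is an illustrative assumption, not a model from the article. In it, doubling efficiency halves the effective price of useful work. Whether total resource use then rises or falls depends on the price elasticity of demand. The elasticity values 0.5, 1.0 and 1.5 are hypothetical.

```python
def resource_use(efficiency: float, elasticity: float,
                 resource_price: float = 1.0, scale: float = 100.0) -> float:
    """Resource consumed under a constant-elasticity demand model (illustrative only).

    Each unit of useful work needs 1/efficiency units of the resource, so the
    effective price of work is resource_price / efficiency. Demand for work is
    scale * price ** (-elasticity); resource use is work demanded / efficiency.
    """
    price_of_work = resource_price / efficiency
    work_demanded = scale * price_of_work ** (-elasticity)
    return work_demanded / efficiency

# Hypothetical elasticities: below, at, and above 1.
for elasticity in (0.5, 1.0, 1.5):
    before = resource_use(efficiency=1.0, elasticity=elasticity)
    after = resource_use(efficiency=2.0, elasticity=elasticity)  # efficiency doubles
    print(f"elasticity {elasticity}: resource use {before:.0f} -> {after:.0f}")
```

With elasticity above 1, doubling efficiency raises resource use (100 to about 141). Below 1, it falls (100 to about 71). Jevons' observation about coal corresponds to the first case.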

But labor is affected differently than pessimists expected. History shows that transformational technologies do not simply replace people but change the very structure of work. AI does not just remove work; it creates new kinds of work that do not yet even have names.

AI agents: from assistants to performers

The AI agent market is projected to grow from $7.8 billion in 2025 to $52.6 billion by 2030. Gartner predicts that by 2028, 15% of day-to-day work decisions will be made autonomously by AI agents, up from 0% in 2024.
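The market projection implies a compound annual growth rate that can be computed directly. Here is a minimal Python sketch that uses only the two endpoint figures quoted above and assumes smooth compounding between them.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate implied by growing from start_value to end_value."""
    return (end_value / start_value) ** (1.0 / years) - 1.0

# AI agent market projection quoted in the text, in billions of US dollars.
rate = cagr(start_value=7.8, end_value=52.6, years=2030 - 2025)
print(f"Implied growth: ~{rate:.0%} per year")
```

This gives roughly 46% growth per year.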

This is a huge shift. AI stops being an “assistant” that makes suggestions and becomes a “performer” that books meetings, processes invoices, runs supply chains, makes customer-handling decisions, and coordinates operations in real time.

The length of tasks that AI agents can complete autonomously doubles roughly every seven months. If the trend holds, in five years AI will be able to perform most tasks that currently require human effort.
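To see what a seven-month doubling implies, the sketch below extrapolates task length forward. The starting point, tasks that take a person about one hour, is a hypothetical value chosen for illustration. Extending an exponential trend unchanged is itself an assumption, not a prediction.

```python
def extrapolate_horizon(current_hours: float, months_ahead: float,
                        doubling_months: float = 7.0) -> float:
    """Project task length under a constant doubling time (trend extrapolation, not a forecast)."""
    return current_hours * 2.0 ** (months_ahead / doubling_months)

# Hypothetical starting point: agents handle tasks that take a person ~1 hour.
start_hours = 1.0
for years in (1, 2, 3, 5):
    hours = extrapolate_horizon(start_hours, months_ahead=12 * years)
    print(f"in {years} year(s): tasks of ~{hours:.0f} hours")
```

Under these assumptions, five years (60 months) is about 8.6 doublings, a roughly 380-fold increase.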

And this is no longer a technological question but a management one.

When an agent makes a mistake, who is at fault?

When decisions affect customers, who is responsible?

How can meaningful human oversight be preserved in a world where AI moves too fast for manual verification to keep up?

Four competitive advantages in the era of cheap intelligence

When intelligence becomes a commodity delivered as a service, sustainable advantage is built around four things:

- Data

Not just volume, but a flywheel effect: the more user interactions, the more accurate the system, and the harder it is for competitors to catch up.

- Brand

When AI is equally smart everywhere, customers choose the companies they trust.

The question is no longer “who built the smarter model?” but “whose AI am I willing to let into my business?”

- People

Those who understand how to embed AI into processes rather than merely press a button.

Those capable of making decisions at the points where AI should not act alone.

- Distribution channels

Companies with existing customer bases and established processes have a huge advantage.

Salesforce can deploy an agent to 150,000 customers literally overnight.

A startup cannot.

The next 1000 days

If current trends continue, within two to four years we will see systems capable of performing most intellectual tasks at a human level, possibly even sooner. This is not a timeline for “calm planning”. It is a race.

The winners will not be those with the smartest AI, but those who know how to use AI responsibly: who build processes with meaningful oversight, create trust, set up proper feedback loops, and integrate AI across the entire organization.

The first 1000 days showed us what AI can do. The next 1000 will show whether we can use these capabilities to serve the many rather than to destroy the familiar order.

The moral is simple: do not be afraid to try, even if someone insists the idea is terrible.

All content provided on this website (https://wildinwest.com/), including attachments, links, or referenced materials, is for informational and entertainment purposes only and should not be considered financial advice. Third-party materials remain the property of their respective owners.