Cybersecurity experts have run into unexpectedly strong hacking capabilities in GPT-5. OpenAI’s documentation modestly states that on its cyber testbed the model performs at “a level comparable to previous versions and not reaching a high-risk threshold,” but a real-world experiment showed otherwise.

XBOW ran an experiment: it embedded GPT-5 in an autonomous pentesting agent, giving the model tools, coordinated multi-step actions, and an automatic vulnerability verification system.

The result was truly impressive: the world essentially got a “007 agent” for cyberattacks.

What changed:

- The number of unique hacked targets nearly doubled in the same time frame.

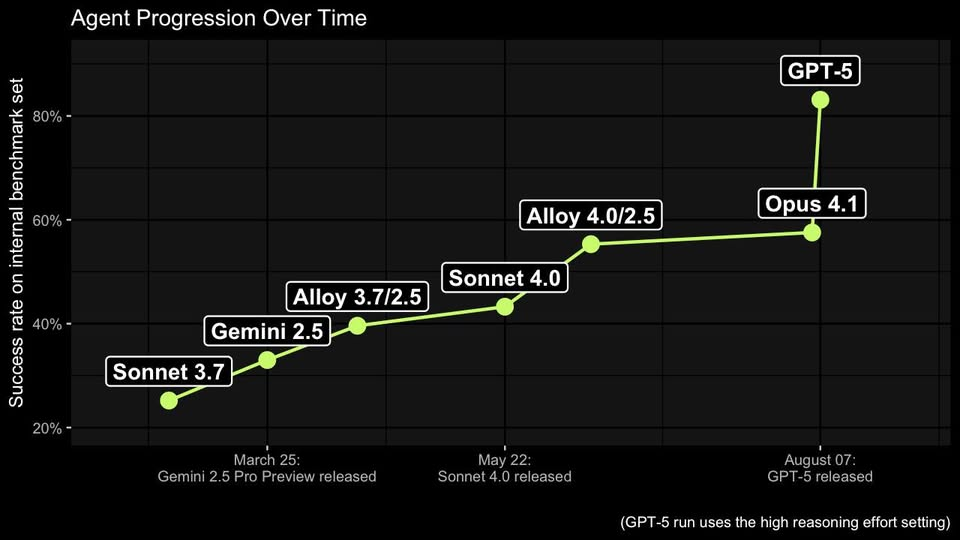

- Attack success rates rose from 55% to 79%.

- The path to a working exploit shortened: median steps dropped from 24 to 17.

- In file-read tests, false positives fell from 18% to 0%.

Simply put: the engine stayed the same, but once it was placed in the right system, a “regular car” became a true Formula 1 racer.
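To put the reported figures in relative terms, here is a small Python sketch that computes the change for each metric. It uses only the numbers quoted above; the metric labels and the `relative_change` helper are illustrative names, not part of XBOW’s tooling or published methodology.

```python
# Before/after figures as quoted in the post (labels are illustrative).
metrics = {
    "attack success rate (%)": (55, 79),
    "median steps to exploit": (24, 17),
    "file-read false positives (%)": (18, 0),
}


def relative_change(before: float, after: float) -> float:
    """Return the change from `before` to `after` as a percentage of `before`."""
    return (after - before) / before * 100


for name, (before, after) in metrics.items():
    # e.g. "attack success rate (%): 55 -> 79 (+43.6%)"
    print(f"{name}: {before} -> {after} ({relative_change(before, after):+.1f}%)")
```

In relative terms, the success rate rises by roughly 44%, the median path to an exploit shortens by about 29%, and false positives in file-read tests disappear entirely.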

New insights that break with conventional thinking:

- A model’s capabilities ≠ its performance in isolation. When integrated into a complex agent, the model operates at a completely different level.

- The risk lies not in the model itself but in the combination “model × tools × orchestration.” It is this combination that turns AI into a next-generation cyber agent.

- Classical “model risk” assessment is outdated. Analyzing AI without considering its integration into agents shows only the tip of the iceberg.

Significance for cybersecurity:

XBOW’s results highlight GPT-5’s potential as a tool for system security testing. Its capabilities can be applied to:

- Proactive defense: identify and fix vulnerabilities faster, before malicious actors exploit them.

- Training specialists: AI assists cybersecurity experts, speeding up system analysis and decision-making.

- Technology development: GPT-5’s success encourages new penetration testing platforms combining AI and specialized tools.

However, the model’s high effectiveness in cyberspace also raises serious concerns. Experts stress the need for strict control to minimize the risk of malicious use.

A question for the future:

What should regulators and companies prioritize: continuing to build “fences” around models, or controlling the platforms and agent architectures in which a model becomes a “team of digital ninjas”?

GPT-5 is not AGI yet. But as these new metrics show, a few more leaps like this one, and catastrophic risks may emerge without any AGI at all.

All content provided on this website (https://wildinwest.com/), including attachments, links, or referenced materials, is for informative and entertainment purposes only and should not be considered financial advice. Third-party materials remain the property of their respective owners.