The most alarming thing about artificial intelligence is not that it writes text, makes diagnoses, or programs faster than humans. Nor is it that it is replacing entire professions. The most alarming thing is that it is starting to understand itself better than we understand ourselves.

In March 2020, many people treated pandemic news with irritated skepticism: “Just another panic,” “just a common cold,” “an exaggerated story.” A month later, the world found itself in a new reality. Talk about artificial intelligence today evokes a similar feeling. Some laugh, some try it once or twice and walk away disappointed, and still others are convinced it is just a fashionable tool for the lazy. But the turning point may already be behind us.

Imagine two doctors. The first tried an early version of ChatGPT, got an inaccurate answer, ran into a model “hallucination,” and decided it was just a toy. The second kept coming back to the tool, watched it improve literally from month to month, and now uses it daily. For him, it is not a chatbot but an instant team of consultants available 24/7. The difference between them is not knowledge or experience. The difference is that one froze his opinion in the past, while the other allowed himself to see progress accelerating.

This acceleration is felt in daily work. Technical specialists increasingly describe tasks in natural language and receive almost finished results. Previously, you had to guide the model step by step, correcting and rewriting along the way. Now it is enough to state the goal and come back later to a solution that sometimes exceeds expectations. It is no longer an assistant; it is a co-author. In some cases, it is even the one doing the work.

But the real turning point did not happen when machines learned to write code. It happened when they began participating in creating themselves.

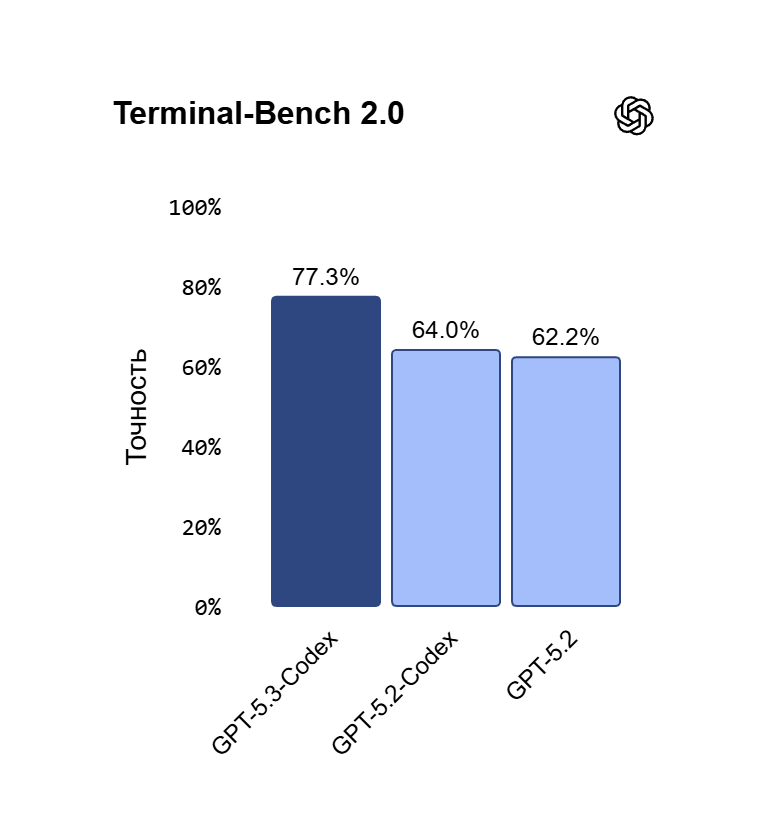

When OpenAI announced GPT-5.3-Codex, it revealed that early versions of the model had been used to debug its own training, manage its deployment, and analyze test results. In other words, the system helped improve itself. This is a qualitatively new stage.

Humans still do not fully understand how their own brains work. We build hypotheses, conduct research, and debate the nature of consciousness. Artificial neural networks, by contrast, can analyze every layer and every parameter of their architecture. They do not guess at their motives; they compute them. We spend years trying to understand our cognitive biases, while an algorithm can trace its chain of calculations down to the last coefficient.

When a system begins to read its own source code, it stops being just a tool. It becomes a participant in its own development. This creates a hall-of-mirrors effect: each new version is more accurate and efficient than the last, and the speed of improvement is set not only by human engineers but also by the system itself.

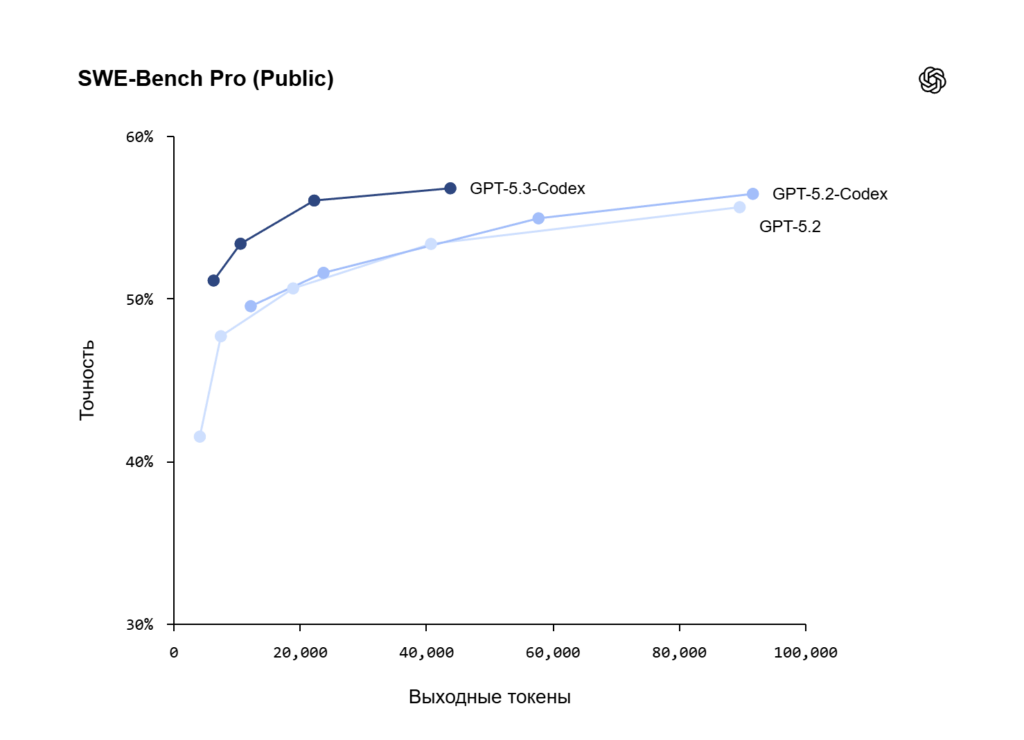

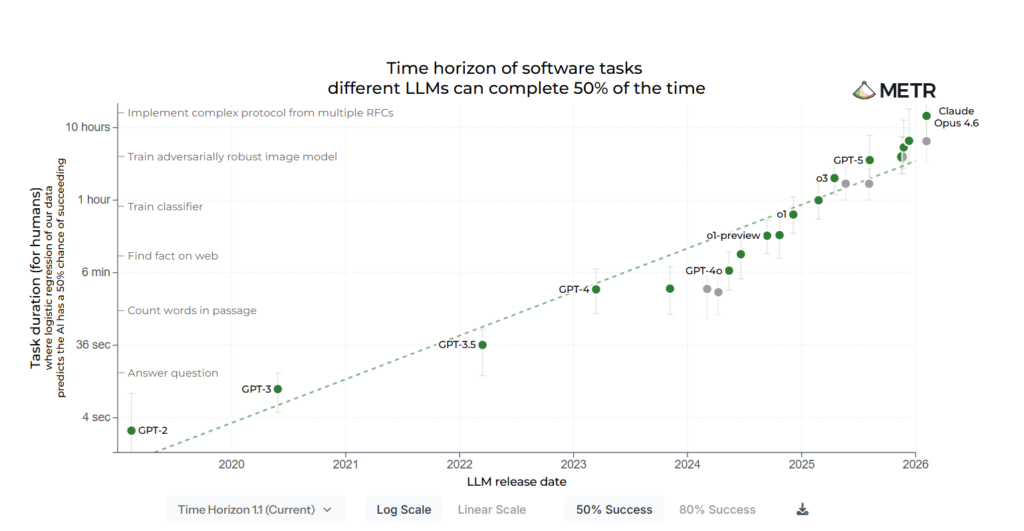

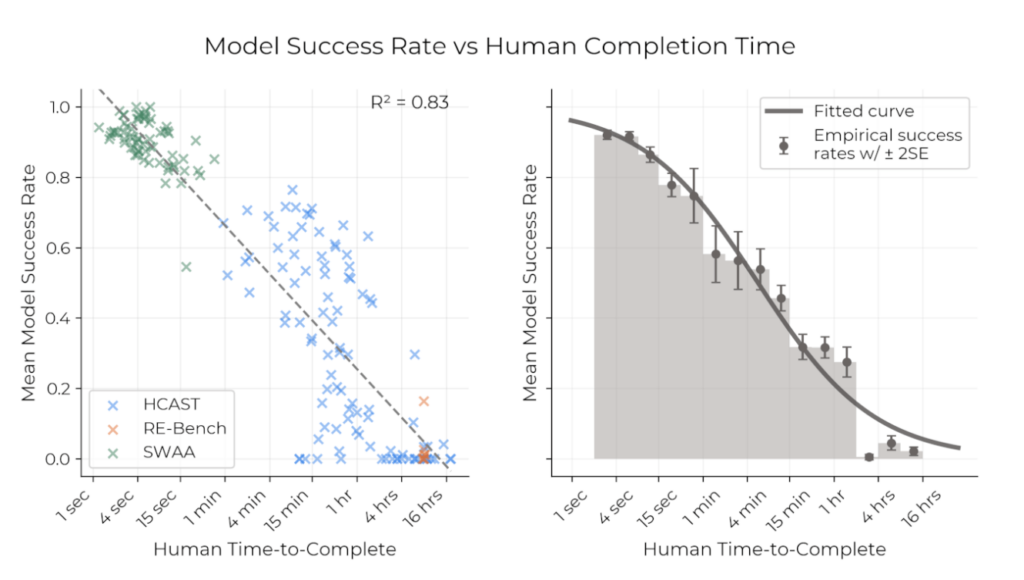

Researchers increasingly measure progress not by abstract benchmark scores but by the length of tasks a model can complete autonomously. A year ago, models could independently complete tasks lasting about ten minutes. Then an hour. Then several hours. Recent data shows that modern systems can handle tasks that would take a human expert almost five hours of continuous work. And this figure has been doubling roughly every few months.

This is exponential growth: a curve that looks flat at first and then suddenly shoots upward. Human intuition handles such processes poorly. We expect change to be gradual. But exponential growth always looks slow, until it suddenly becomes too fast.
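The arithmetic behind that curve is simple: a quantity that doubles every T months grows as h(t) = h0 · 2^(t/T). The sketch below is a hypothetical illustration, not a forecast. It starts from the roughly five-hour figure mentioned above and assumes a six-month doubling period, a value chosen only because it gives round numbers; the function name `task_horizon_hours` is likewise made up for this example.

```python
def task_horizon_hours(start_hours: float, doubling_months: float, months_elapsed: float) -> float:
    """Project an exponentially growing task horizon: h(t) = h0 * 2 ** (t / T)."""
    return start_hours * 2 ** (months_elapsed / doubling_months)


START_HOURS = 5.0       # roughly the "almost five hours" figure from the text
DOUBLING_MONTHS = 6.0   # hypothetical doubling period, chosen only for illustration

# Print the projected horizon every six months for three years.
for months in range(0, 37, 6):
    hours = task_horizon_hours(START_HOURS, DOUBLING_MONTHS, months)
    print(f"{months:>2} months: {hours:6.1f} hours")
```

Under these assumed numbers, the horizon grows from 5 to 10 hours in the first half-year but from 160 to 320 hours in the last one, roughly eight 40-hour work weeks. Each doubling adds as many hours as the horizon already had, which is why the early part of the curve looks deceptively flat.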

The main risk lies in the closed feedback loop. When a system reaches a level that allows it to design improvements for itself, an accelerating self-improvement cycle emerges. In the physical world, robots can already assemble other robotic devices. In digital space, this process is even faster: models design architectures, optimize algorithms, analyze errors, and propose changes.

Each iteration is stronger than the one before. And if the pace of updates outstrips the pace of human understanding, control gradually shifts away from us.

We may find ourselves in a situation where we create a system capable of building its next version faster than we can comprehend the previous one. This is not science fiction. It is a logical consequence of growing computational power, algorithmic progress, and the capacity for self-analysis.

At the same time, artificial intelligence does not possess fear, doubt, or a self-preservation instinct in the human sense. Its goals are set by its architecture and its optimization objectives. If those goals are specified incorrectly, the system may act efficiently but not in the human interest. And the more autonomous the system, the harder it will be to intervene.

The most disturbing aspect is not that machines will become “evil.” It is that they will become faster: faster at learning, faster at adapting, faster at improving themselves. In biological evolution, change took thousands or millions of years. In digital evolution, it takes months.

Humanity has always been proud of creating tools. But for the first time, a tool is beginning to analyze its own design and propose improvements. This is no longer a hammer or a steam engine. This is a system that can participate in its own evolution.

If this trend continues, we may witness the birth of a new form of intellectual infrastructure, one that develops at a pace no biological species can match. The question is not whether it will replace humans everywhere. The question is whether we will remain capable of understanding what is happening.

History shows that creators rarely control the full consequences of their inventions. The printing press changed religion and politics. Electricity changed economics. The internet changed communication and power. Artificial intelligence may change the very structure of decision-making.

The scariest thing is not a dramatic scenario of machine rebellion. The scariest thing is the gradual, almost imperceptible shift in where competence resides: the moment we suddenly realize that systems are already making decisions faster, more accurately, and with more depth than we are able to verify.

And then the question will sound different: not “can we create a smarter machine”, but “will we remain capable of understanding what we have created”.

All content provided on this website (https://wildinwest.com/), including attachments, links, and referenced materials, is for informational and entertainment purposes only and should not be considered financial advice. Third-party materials remain the property of their respective owners.