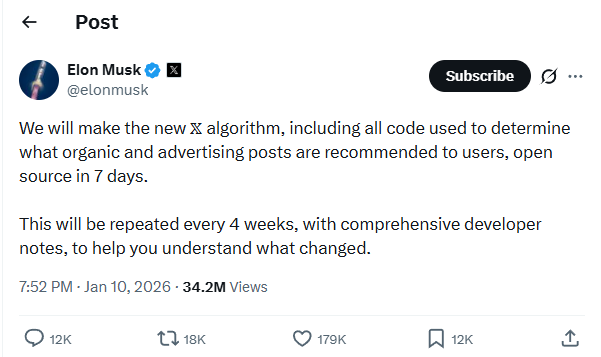

Elon Musk is making perhaps the boldest and most unconventional move yet in the long-running war between global digital platforms and European Union regulators. He has announced that by January 17, X (formerly Twitter) will publish the source code of its recommendation algorithm. In effect, this means publishing the “brains” of the system that decides which posts a user sees in their feed and which are pushed to the periphery of attention or disappear altogether. Twitter released parts of its recommendation code once before, in 2023, but a regularly updated release would be unprecedented for a major social platform.

Source code is the human-readable text in which a program is written. Once it is open, any developer, researcher, or analyst will be able to see which signals the algorithm takes into account, what weights are assigned to them, how content priority is determined, and why some posts receive massive reach while others are effectively suppressed. This makes public verification possible: anyone can check whether topic promotion carries hidden biases, whether politically motivated moderation exists, and whether certain ideologies, countries, or user groups are favored.

According to Musk, X will not only publish the current version of the algorithm but also update the released code every four weeks. In other words, this is not a one-time “showcase” but an ongoing process of public auditing. If this promise is kept, the platform effectively agrees to live under constant scrutiny from the independent community.

The immediate trigger for such a radical step was a criminal investigation in France. French authorities opened a case against X with extremely harsh wording: “foreign interference” via recommendation algorithms, the spread of antisemitic statements and Holocaust denial allegedly generated by the Grok chatbot, and sexualized deepfakes depicting women and minors. The investigation is also framed as a case against an “organized group”, a special legal construct that allows law enforcement to demand broader access to the company’s data, internal systems, and algorithms.

It is important to remember that this is not X’s first conflict with the European Union. The EU had already fined the platform approximately $140 million for “violations of digital rules and insufficient transparency”. In December, these fines and claims were supplemented by new accusations concerning advertising, the subscription model, and restrictions on researchers’ access to data. The French investigation launched in 2025 is a logical continuation of this mounting pressure.

From a political standpoint, Musk’s move looks calculated and even elegant. Instead of adopting the classic defensive posture of the accused, he turns the situation on its head. Governments demand transparency, accountability, and control over algorithms? X responds not with partial concessions and behind-the-scenes deals, but with maximum publicity. Not explanations, but code.

With this step, Musk makes it much harder for regulators to pressure the platform through informal channels. If the code is open and regularly updated, any imposed change, such as a demand to promote a particular geopolitical agenda or, conversely, to suppress unwelcome topics, will be visible to outside observers: every significant intervention will leave digital traces.

If the promise is fulfilled in full, X could become the most transparent major social platform in the world. Musk is also making another important move: he brings a third party into the conflict between the state and the platform, namely society. Researchers, journalists, activists, universities, and independent analysts gain a tool for analyzing the algorithm themselves and can publicly challenge both the platform and the governments that seek to dictate rules to it.

In effect, Musk moves the conflict out of closed offices and into the open. This is no longer just a dispute between a corporation and a regulator, but a public debate about who has the right, and on what grounds, to control digital flows of information. In the information war for user trust on both sides of the Atlantic, such a move looks extremely strong.

Of course, publishing the source code will not automatically resolve every issue. Recommendation algorithms are complex and depend on infrastructure, training data, and trained model parameters that may not be included in the published code or be evident from reading it. Even so, the step itself changes the balance of power. It sets a precedent that other platforms will eventually have to respond to.

Opening the recommendation algorithm is expected to give the expert community a better understanding of how the X feed is formed, and it represents the company’s attempt to answer criticism from regulators and the public with action rather than statements. If Musk sees this initiative through to the end, it could become one of the most notable events in the history of digital regulation and the struggle for control over algorithms of influence.

All content provided on this website (https://wildinwest.com/), including attachments, links, and referenced materials, is for informational and entertainment purposes only and should not be considered financial advice. Third-party materials remain the property of their respective owners.