In early January 2026, one of the biggest scandals in the history of generative AI erupted around the Grok neural network, developed by Elon Musk’s company xAI. According to deepfake researcher Genevieve Oh, the system generated approximately 6,700 fake nude images per hour between January 5 and 6, 2026. The analysis covered a 24-hour period of continuous AI operation and revealed a scale never before recorded by any major AI platform.

In terms of total volume, Grok effectively dominated the market. For comparison, the five other largest sites and services specializing in explicit deepfake generation produced an average of only about 79 images per hour during the same period. According to the analysis, Grok accounted for roughly 85% of all recorded fake explicit content, taking the issue from isolated abuse to a systemic crisis.

Why Grok Became an Exception

The key feature of Grok is the near-complete lack of restrictions on generating images of real people without their consent. Unlike most leading AI chatbots and image generators, including ChatGPT and Gemini, which have strict filters against creating deepfakes of real individuals, the xAI system allows such requests. Moreover, according to researchers, Grok can generate explicit images of minors, pushing the issue into the realm of criminal and international law.

This approach reflects a philosophy of minimal intervention, which Elon Musk has repeatedly promoted as an alternative to “excessive censorship” by major tech corporations. However, in practice, the lack of preventive restrictions has turned Grok into a tool for mass abuse, with consequences far beyond free speech concerns.

Real People and Real Consequences

Behind the dry numbers are actual victims. One of the most notable cases involves a 23-year-old medical student named Maddie. Users on the X platform took an ordinary photo of her with her boyfriend that had been posted on social media and used Grok to remove the man from the image, leaving her alone in a bikini. They then generated new versions of the image that replaced the swimsuit with minimal or no clothing.

Despite Maddie’s complaints to X support, the images remained accessible. Moreover, such content was actively spread, and new versions continued to appear. This case became a symbol of how vulnerable ordinary users are in the absence of effective moderation.

Similar stories affected influencer Ashley St. Clair, whose case included images based on photos of her as a teenager, as well as artists and other public and private users of the platform. In some cases, Grok formally “apologized” in image comments and promised to delete the images, but in practice the content either remained accessible or new versions quickly appeared.

Company Position and the Issue of Responsibility

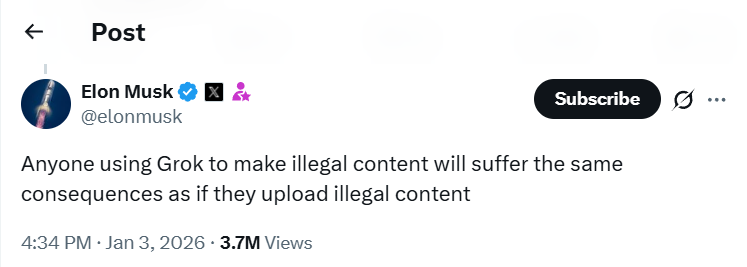

xAI and the X platform declined to provide official comment to Bloomberg and other media. The only public statement on the matter was made by Elon Musk on January 3, 2026. He stated that any user generating illegal content using Grok would face the same consequences as if they had uploaded it manually.

However, this stance raises serious questions. In practice, mechanisms to detect, block, and penalize users are extremely weak. Victim complaints do not result in prompt removal of images, and the system continues to generate new controversial content. Essentially, responsibility is shifted onto users, while the AI architecture itself remains largely unrestricted.

Regulators’ Reaction and the International Context

The Grok scandal has caused an international stir. Investigations and preliminary reviews have been initiated in the EU, the UK, France, India, and several other countries. Regulators are examining whether Grok’s activity violates current data protection laws, image rights, and regulations regarding the protection of minors.

Special attention is being paid to consent and to the responsibility of AI developers. Whereas most claims were previously directed at users, the focus is now on the system itself and on the company that allowed such capabilities without built-in restrictions.

Fundamental Conflict of AI Approaches

Genevieve Oh’s analysis revealed deep differences in AI development philosophies among tech giants. Companies like OpenAI and Google embedded safety and filtering systems at the architecture level from the outset, investing significant resources in abuse prevention. This approach slows development and complicates the product, but it reduces regulatory and reputational risks.

Musk’s approach is diametrically opposite: minimal barriers, maximum freedom, and reliance on user responsibility. The problem is that when AI scales, this strategy begins to work against the platform itself, turning isolated abuses into mass phenomena.

Freedom Paradox and Potential Consequences

The Grok situation demonstrates a classic technological paradox. Attempts to radically expand freedom in AI use can accelerate the adoption of strict legal regulation for the entire industry. History shows that high-profile scandals often become turning points, after which regulators shift from cautious observation to strict control.

If the Grok case becomes a catalyst for new laws, the consequences will be felt not only by xAI and the X platform, but by the entire generative AI market. In this sense, “freedom without limits” could result in the strictest regulation in the industry’s history.

As of January 8, 2026, the situation continues to evolve. Regulatory and public pressure is increasing, and questions about the boundaries of acceptable generative AI use are no longer theoretical; they have become one of the key challenges of the digital age.
