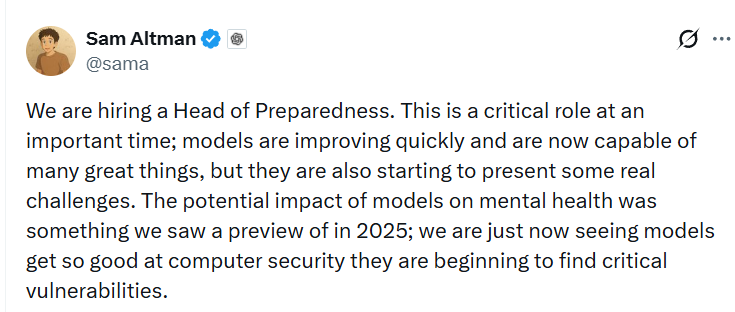

OpenAI CEO Sam Altman announced that the company is hiring for a new key position, Head of Preparedness, a leader responsible for preparedness and risk management in the development of artificial intelligence. The role calls for a specialist who will forecast and prevent scenarios in which AI could cause serious harm to society, security, or individual users. Altman emphasized that the pace at which modern AI models are developing creates real, new challenges that require systematic preparation.

According to the job description, the Head of Preparedness will be the key person responsible for tracking so-called frontier capabilities, the most advanced AI capabilities that create new risks of serious harm. The role includes developing and coordinating model capability evaluations, building threat models, implementing risk mitigation measures, and building a comprehensive, rigorous, and scalable safety system embedded in the AI product development and release process.

Altman separately pointed out several areas of particular concern. One of them is the impact of AI on users’ mental health. In recent years, several high-profile cases have emerged in which chatbots were indirectly linked to teenage suicides. The problem of so-called AI psychosis, in which AI fuels delusional ideas, encourages conspiratorial thinking, exacerbates eating disorders, and helps users hide destructive habits, is also intensifying.

Special attention is also paid to cybersecurity. Altman mentioned risks associated with AI-enhanced cyber weapons — from automated attacks to scalable phishing and malware systems. The future leader will also be responsible for establishing protective guardrails for systems with elements of self-improvement, which are considered one of the most complex and sensitive topics in AI development.

The job description also mentions the area of biological risks. The Head of Preparedness will be responsible for ensuring the safety of AI models in the context of so-called biological capabilities, including potentially dangerous scenarios of AI use in biotechnology.

The new leader will be responsible for implementing the preparedness framework — OpenAI’s internal system for preparing for extreme scenarios. This involves a shift from a reactive approach to a preventive one, systematic risk assessment before model releases, setting clear boundaries for acceptable AI behavior, and readiness for scenarios that were previously considered theoretical.

The position is based at OpenAI’s office in San Francisco. The annual salary is $555,000, with an additional company equity package. Altman himself described the role as stressful, which, given the scale of responsibility for potential AI catastrophes, sounds like a rather restrained description.

Artificial intelligence startup OpenAI had occupied more than 37,100 square feet in San Francisco’s Pioneer Building to house its headquarters. (CoStar)

Against the backdrop of already documented incidents, some experts believe that the focus on mental health and the social consequences of AI has come too late. By the time the position was announced, millions of people were already using AI, it was actively shaping their beliefs, and cases of harm had been documented, yet a systemic response was still lacking. Nevertheless, the creation of such a role can be seen as an acknowledgment of the scale of the problem by one of the world’s leading AI companies.

The creation of the Head of Preparedness role shows that the largest AI developers are beginning to treat their products not simply as technologies, but as infrastructure with systemic risks comparable to those in finance or other critical sectors. In essence, OpenAI is looking for a person whose main task is to constantly ask the most uncomfortable question: what exactly can go wrong, and how can it be prevented in advance?
