The end of 2025 marked a turning point for the artificial intelligence industry. After the rapid development of language models and generative imagery, major technology companies took the next step — moving toward the creation of so-called “world models.” These are AI systems capable of generating full-fledged three-dimensional environments with internal logic, physics, and interactive capabilities.

Google DeepMind, Meta, Elon Musk’s xAI, and the startup World Labs have already entered the race. Experts increasingly compare the importance of this direction to the emergence of ChatGPT, calling it the foundation for future intelligent machines.

What Are “World Models” and Why They Matter

World models are AI systems that do not merely describe reality with words or images but create a digital analogue of it. In these virtual environments, the laws of physics apply: objects have weight and inertia, light reflects off surfaces, characters respond to user actions, and events follow cause-and-effect relationships.

The key difference from video games lies in how these worlds are created. Traditional game worlds are manually designed by teams of designers, artists, and programmers. With a world model, the environment is generated automatically from a text prompt, an image, a video, or a combination of inputs. Essentially, the AI “imagines” a space and immediately makes it available for interaction.

From the perspective of AI researchers, this represents a qualitative leap. Such systems begin to model not only language or images but also the structure of the surrounding world, which many researchers consider a necessary condition for more general and autonomous artificial intelligence.

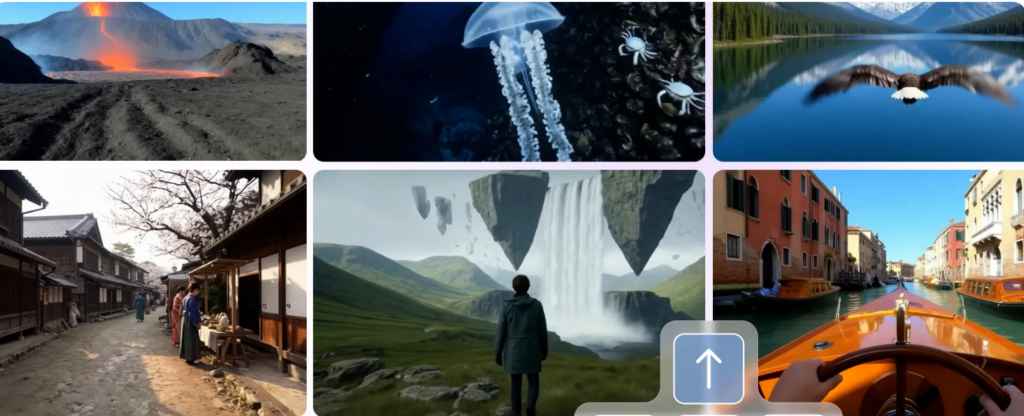

One of the most notable releases was Genie 3, presented by Google DeepMind in August 2025. DeepMind describes it as its first world model to allow real-time interaction; it generates interactive 3D environments at 24 frames per second and 720p resolution.

Genie 3 can maintain the coherence of a virtual world for several minutes, which is a significant improvement over earlier experimental solutions where scenes quickly “collapsed” and lost logical consistency. Users can freely move through the environment, interact with objects, and modify the surroundings using text commands — for example, changing the weather, the time of day, or adding new elements.
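To give a sense of scale for the figures quoted above, here is a rough back-of-the-envelope calculation. It assumes that "720p" means 1280×720 pixels and uses three minutes as a stand-in for "several minutes"; neither number comes from DeepMind, and the function names are purely illustrative.

```python
# Rough arithmetic for real-time generation at 24 fps and 720p.
# Assumption: "720p" means 1280x720 pixels (the article gives only the label).
FPS = 24
WIDTH, HEIGHT = 1280, 720


def frame_budget_ms(fps: int) -> float:
    """Time available to produce a single frame, in milliseconds."""
    return 1000.0 / fps


def pixels_per_second(width: int, height: int, fps: int) -> int:
    """Number of pixels that must be generated every second."""
    return width * height * fps


def frames_in(minutes: float, fps: int) -> int:
    """Number of frames that must stay mutually consistent over a session."""
    return round(minutes * 60 * fps)


if __name__ == "__main__":
    print(f"frame budget: {frame_budget_ms(FPS):.1f} ms")
    print(f"pixels per second: {pixels_per_second(WIDTH, HEIGHT, FPS):,}")
    # Three minutes is an illustrative value for "several minutes".
    print(f"frames in 3 minutes: {frames_in(3, FPS):,}")
```

Under these assumptions the model has a little under 42 milliseconds per frame, and a three-minute session spans 4,320 frames that all have to remain consistent with one another.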

Google is testing Genie 3 in combination with the SIMA agent, which performs tasks within the generated worlds. This is an important step toward training universal AI agents capable of adapting to new environments without manual configuration for each situation.
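The idea of an agent acting inside a simulated world can be illustrated with a deliberately tiny sketch. This is not how Genie 3 or SIMA work and it uses none of their interfaces; `ToyWorld`, `simple_agent`, and `run_episode` are hypothetical names, and the "world" is a single object on a line with hand-written physics rather than a learned model.

```python
from dataclasses import dataclass


@dataclass
class ToyWorld:
    """Hypothetical 1-D world: one object with mass, position, and velocity."""
    mass: float = 2.0
    position: float = 0.0
    velocity: float = 0.0
    dt: float = 1.0 / 24  # one simulation step per frame at 24 fps

    def step(self, force: float) -> float:
        """Advance one frame; with zero force the object keeps moving (inertia)."""
        acceleration = force / self.mass
        self.velocity += acceleration * self.dt
        self.position += self.velocity * self.dt
        return self.position


def simple_agent(position: float, velocity: float, target: float) -> float:
    """Hypothetical controller: push toward the target and damp the speed."""
    return 4.0 * (target - position) - 3.0 * velocity


def run_episode(target: float = 1.0, steps: int = 240) -> float:
    """Observe, act, and step the world for a fixed number of frames."""
    world = ToyWorld()
    for _ in range(steps):
        force = simple_agent(world.position, world.velocity, target)
        world.step(force)
    return world.position


if __name__ == "__main__":
    print(f"final position: {run_episode():.3f}")
```

The loop of observing the state, choosing an action, and stepping the world is the part that carries over; in a real system the hand-written `step` would be replaced by the generative model and the controller by a trained agent.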

The startup World Labs, founded by renowned AI researcher Fei-Fei Li, is focusing on “spatial intelligence.” The company raised $230 million in funding and launched its first commercial model, Marble, in November 2025.

Unlike Genie 3, Marble is designed to create persistent, savable 3D spaces. Worlds can be generated from text, images, videos, or panoramas, then edited, saved, and exported for use in third-party applications. This makes Marble particularly attractive for the gaming industry, architecture, and design.

World Labs is already collaborating with game studios, offering tools for rapid prototyping of levels and environments through a browser. Essentially, developers gain access to an “on-demand 3D engine” that significantly reduces the time and cost of content production.

Meta has focused on the practical application of world models for AI training and robotics. The Habitat 3.0 platform is designed to simulate everyday and social scenarios in which virtual robots and assistants learn to interact with environments and people.

Habitat 3.0 supports detailed human avatars, realistic physics, and multi-agent collaboration. Through VR interfaces, researchers can observe how AI performs tasks — from cleaning rooms to assisting humans with daily activities.

For Meta, this is a strategic direction related less to entertainment and more to the creation of future autonomous assistants and household robots.

Elon Musk has also joined the effort. In October 2025, his company xAI hired two researchers from Nvidia, Zeeshan Patel and Ethan He, who had previously worked on world models for the Omniverse platform.

Musk announced plans to release a fully AI-generated video game by the end of 2026. In such a game, everything from landscapes and characters to the storyline and dialogue will be created and modified in real time by artificial intelligence. This aligns with Musk’s broader vision of AI not merely as a tool but as a full-fledged creator of digital worlds.

How AI Worlds Differ From the Metaverse

Despite superficial similarities, world models fundamentally differ from the concept of the metaverse. The metaverse envisions a persistent virtual space for human communication and interaction, created and maintained by developers.

AI world models serve a different purpose. They typically generate temporary, task-specific environments for AI training, robot testing, or content generation. These worlds are not designed for long-term human “residence” and may disappear immediately after fulfilling their purpose.

While creating a metaverse location can take months of work, a world model can generate a new environment in seconds. Each world is unique and adapted to a specific goal — whether simulating traffic, training a robot, or developing a game level.

Practical Applications

The most obvious application of world models is in training robots and autonomous vehicles. Simulations allow millions of scenarios to be tested without the risks and costs associated with real-world trials.
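A minimal sketch of what testing many scenarios in simulation means in practice, using a toy braking model rather than a world model. The parameter ranges, the `stopping_distance` formula (reaction distance plus constant-deceleration braking), and the function names are all illustrative assumptions, not data from any real vehicle program.

```python
import random


def stopping_distance(speed_mps: float, reaction_s: float, decel_mps2: float) -> float:
    """Distance covered during the reaction time plus the braking distance."""
    return speed_mps * reaction_s + speed_mps ** 2 / (2 * decel_mps2)


def run_scenarios(n: int, seed: int = 0) -> float:
    """Sample n randomized scenarios; return the fraction ending in a collision."""
    rng = random.Random(seed)  # seeded so that a run can be reproduced
    collisions = 0
    for _ in range(n):
        # All ranges below are illustrative assumptions.
        speed = rng.uniform(5.0, 30.0)        # vehicle speed, m/s
        reaction = rng.uniform(0.1, 1.0)      # reaction delay, s
        decel = rng.uniform(3.0, 9.0)         # braking deceleration, m/s^2
        obstacle = rng.uniform(10.0, 120.0)   # distance to obstacle, m
        if stopping_distance(speed, reaction, decel) > obstacle:
            collisions += 1
    return collisions / n


if __name__ == "__main__":
    rate = run_scenarios(100_000)
    print(f"simulated collision rate: {rate:.3f}")
```

Each simulated collision costs nothing, which is the point: rare and dangerous combinations of conditions can be sampled as often as needed before any hardware is put at risk.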

Video games remain one of the closest markets for commercialization. The industry, with annual revenues of around $190 billion, is already actively adopting AI for content generation, and world models could radically transform the development process.

Additionally, these technologies are finding applications in construction and architecture, where they enable modeling of lighting, pedestrian flows, and structural loads. In medicine, they could be used to simulate procedures and drug effects before real-world trials, with the aim of improving diagnostic accuracy and reducing risks.

The development of world models raises serious concerns about energy consumption. Generating and maintaining 3D worlds in real time is expected to require significantly more computational resources than running language models. Data centers already consume an estimated 1 to 1.5% of global electricity, and widespread adoption of such systems could substantially increase this share.

A similar situation arose in the 1990s with the development of 3D graphics, when competition was not only about technology but also about standards and efficiency. That episode suggests the winner is not necessarily the first to release a product but the one that manages to scale it at acceptable cost.

The transition from text-based AI to spatial systems represents a paradigm shift. Artificial intelligence ceases to be merely a “talking machine” and begins to understand and model the world in three dimensions. This makes world models one of the key technologies of the coming years, with a major impact on the future of robotics, autonomous systems, and digital entertainment.

All content provided on this website (https://wildinwest.com/), including attachments, links, or referenced materials, is for informational and entertainment purposes only and should not be considered financial advice. Third-party materials remain the property of their respective owners.