🤖 Google has turned Maps into a “talking navigator” powered by Gemini AI.

Now you can ask not only “where to go?” but also “where do they serve the best pizza along the way?”, and instead of dry coordinates you get a meaningful answer from the AI.

Google has officially unveiled a major update to Google Maps that integrates its flagship AI model, Gemini. The update turns the familiar app into a full-fledged “smart companion” that can not only plan routes but also hold a conversation, help on the road, suggest events, and even plan your day, all by voice and hands-free.

How it works

Users can now talk to Maps as they would to a chatbot: ask follow-up questions, request recommendations, get news, or even ask it to add a meeting to their calendar.

For example: “Show me vegetarian restaurants along the route” — Gemini will reply, suggest options, and ask: “Would you like me to check where parking is available nearby?”

The AI keeps track of conversational context, so it understands follow-up questions without users having to restate their query each time.

On the road — like a co-pilot with a sense of humor

Gemini can now answer questions about places along the route — from the nearest cafés and museums to sports arenas.

Moreover, it can read news, weather, or sports results aloud if the driver asks.

Another new feature is the ability to report traffic incidents by voice. Say “There’s an accident on the highway,” and the system will mark it on the map, alert other drivers, and reroute you.

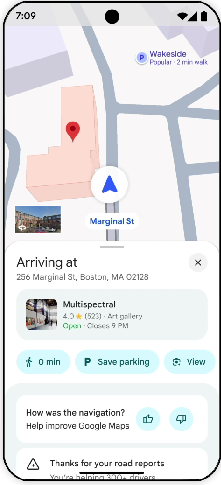

Next-generation navigation

Google has also redesigned visual navigation: the AI now uses Street View imagery to give more “human-like” directions.

Instead of the dull “Turn in 300 meters,” Maps will say: “Turn after the Starbucks café” or “past the Shell gas station.”

The AI recognizes real-world landmarks, highlights them on screen, and makes navigation intuitive, as if a sharp-eyed passenger were sitting next to you rather than a robot.

Smart vision: Gemini + Google Lens

Integration with Google Lens adds “vision” to the AI. Just point your camera at a building, monument, or landmark — and Gemini will tell you what it is, why it’s popular, and provide links for more details.

Scale and numbers

Google claims Gemini relies on data from over 250 million places and billions of Street View images.

This allows the AI to identify landmarks more accurately, predict traffic, and improve real-time routing.

When and where

- The new features will roll out gradually on iOS and Android devices.

- The U.S. will be the first to receive them, within weeks:

  - voice alerts about traffic and accidents will appear on Android;

  - the updated visual navigation and Lens mode with Gemini will arrive by the end of the month.

- Europe and Asia will follow in 2026, after localization and adaptation to regional services.

Why it matters

According to Google representatives, integrating Gemini is a step toward creating “smart maps” that understand people, not just commands.

It’s part of the company’s “AI first” strategy, where AI becomes the default interface — not only in search, but also in navigation, smartphones, and even cars.

💬 Conclusion (slightly ironic)

Google Maps has finally become what navigators always wanted to be: a friend who knows the way, jokes along the route, and never argues about where to turn.

Now all that’s left is for it to refuel the car by itself.

A video clip of the project can be seen on our Telegram channel.

All content provided on this website (https://wildinwest.com/), including attachments, links, or referenced materials, is for informational and entertainment purposes only and should not be considered financial advice. Third-party materials remain the property of their respective owners.